Would you believe “Vibe Health” could make you a life-saving doctor? Would you bet on “Vibe Building” turning you into a skyscraper-raising civil engineer? Would you subscribe to “Vibe Law” expecting to walk into court and win?

If your answer is yes, I rest my case—and good luck with the lawsuits. If your answer is no, then you already see where I’m going. Why reject those fantasies, yet entertain the idea that “Vibe Coding” can magically transform anyone into a software engineer?

Yes, we’ve all seen the demo: someone types a few sentences, and an app pops out as if by magic. It’s intoxicating. It’s exhilarating. Executives from Microsoft to Coinbase swear AI is already writing “huge chunks” of production code. Mark Zuckerberg has been “12 months away” from AI-written code for three years running. Dario Amodei, the ever-present, ever-cited, ever-hyperbolic CEO of Anthropic, has promised in cycles of 90 days, then six months, then a year, that Claude would replace engineers wholesale. The promise of “vibe coding” is to turn every employee into a software engineer, building complex systems with the ease of a conversation.

It’s a powerful narrative, but once that initial “wow” factor fades, many of us are waking up to a serious headache. When vibe-coded projects are pushed towards production, they often decay into what Jack Zante, senior engineer at PayPal, aptly calls “development hell”. The initial burst of speed quickly gets bogged down by a mountain of technical debt, security flaws, and architectural chaos. A prototype hacked together by a single user, even a non-technical one, might run beautifully for one or two people, giving its creator a powerful hit of dopamine. But scaling it to 50, 200, or 6,000 users (let alone hundreds of thousands) quickly exposes the cracks: poor architecture strangles scalability, security gaps leak sensitive data, and design shortcuts wreck the user experience under real-world load. What initially looked magical becomes painfully brittle.

As Java creator James Gosling bluntly put it, as soon as a vibe-coded project gets even slightly complicated, the tools “pretty much always blow their brains out”. He argues the approach is simply “not ready for the enterprise because in the enterprise, software has to work every [expletive] time”.

Let’s cut the marketing and the hyperbole designed to impress greedy VCs and drooling investors (hello, Mr. Amodei) and talk shop. Why does this conversational approach, so impressive in demos, consistently fail the rigorous demands of production-grade software? The reasons are not superficial; they are baked into the very foundation of the technology.

The Original Sin: Ambiguity and Bad Data

The first problem: AI models train on oceans of public code, from GitHub repositories and student projects to half-finished experiments and quick-fix Stack Overflow snippets. That foundation is inherently shaky. When you train a model on mediocre code, you can’t be surprised when it generates more of the same. Garbage in, garbage out.

The second, more fundamental issue is the interface itself: natural language. Programming languages are formal systems designed for precision and to eliminate ambiguity, and English is ambiguity incarnate. “Get two pints of milk, and if they have eggs, get 12” is a famously unclear instruction. A compiler, however, parses `if (x > 5)` one way and one way only.
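To make the milk-and-eggs ambiguity concrete, here is a minimal Python sketch. The function names and the returned dictionary shape are invented for illustration; the point is only that one English sentence maps to two different, equally valid programs, and that code forces you to pick one.

```python
# One English sentence, two defensible programs.
# All names here are hypothetical, chosen only for this illustration.

def intended_reading(store_has_eggs):
    """What the speaker probably meant: two pints of milk, plus a dozen eggs if available."""
    return {"milk_pints": 2, "eggs": 12 if store_has_eggs else 0}


def literal_reading(store_has_eggs):
    """What the sentence can also mean: if they have eggs, get 12 pints of milk."""
    return {"milk_pints": 12 if store_has_eggs else 2, "eggs": 0}


if __name__ == "__main__":
    # Same input, same sentence, two different shopping bags.
    print(intended_reading(True))
    print(literal_reading(True))
```

Neither function is wrong with respect to the sentence. Written as code, though, the choice is explicit and reviewable, which is exactly what a natural-language prompt does not guarantee.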

Renowned computer scientist Edsger Dijkstra argued in his essay “On the foolishness of ‘natural language programming’” that the ambiguity of natural language makes it fundamentally unsuited for the precise, rigorous task of programming. He believed that using a formal language was a necessary restriction on our freedom of expression to avoid building a “Tower of Babel” (but, of course, you’d need to be a software engineer to be aware of that essay and of what it means).

Vibe coding attempts to reverse this, and the results are predictably unstable.

The Big Picture Blind Spot: Architectural Collapse by a Thousand Prompts

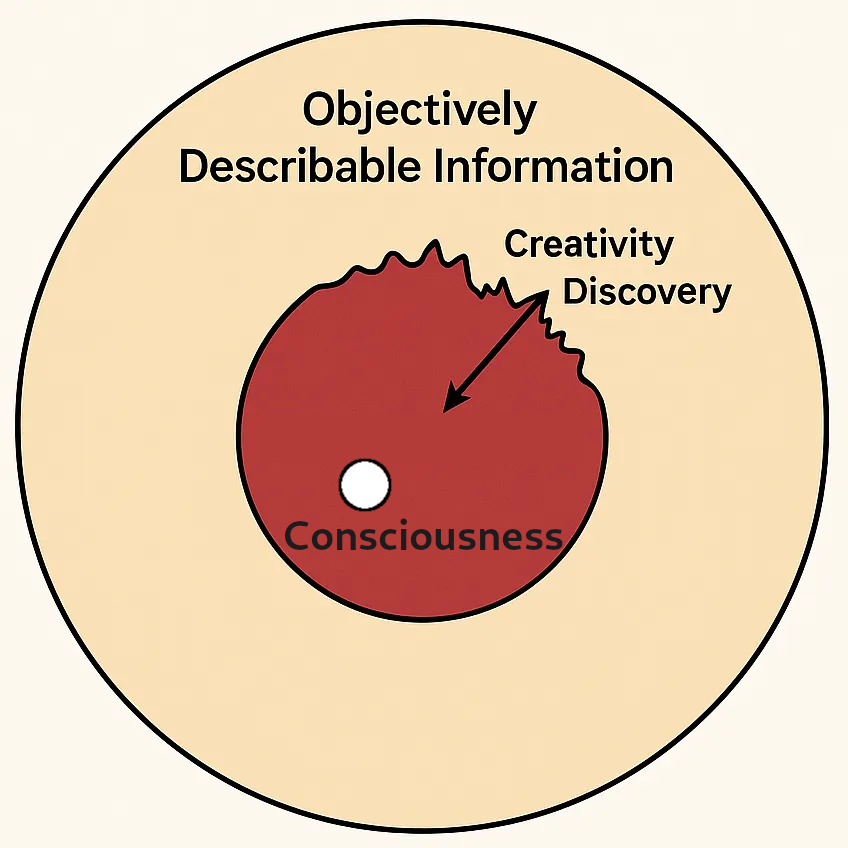

The most significant technical failures of vibe coding stem from the AI’s inability to maintain a coherent, long-term architectural vision. Models don’t “see ahead”; they react to the immediate prompt within a limited context. This leads to a cascade of predictable and costly problems.

- The Context Window is Your Enemy: An AI’s memory is finite. As your project grows and the chat history fills the context window, the quality of the output degrades significantly. The model starts to “forget” earlier decisions, leading to inconsistencies and regressions. Starting a new session to clear the slate might seem like a fix, but it often erases crucial context, forcing you to re-explain the entire system from scratch. Coding assistants like Claude Code, Gemini CLI and OpenAI Codex seem to magically pick up where you left off, but while they warm up to the project again they can lose track of the bigger picture and introduce regressions and duplication.

- Business Logic and Data Model Drift: Without a holistic view, an AI will solve the same problem in multiple ways across the codebase. In developers’ experience, if a feature is needed in five places, the AI will build it five times in five different ways instead of creating a single, reusable component. This leads to a nightmare of scattered business rules: if you later need to modify a rule, it has to be found and changed in five different places, and missing one leaves the system inconsistent. Similarly, this step-by-step approach wreaks havoc on the data model, often resulting in unnormalized databases, update/deletion anomalies, and inefficient joins that a non-technical user would never spot. To avoid these problems you’d need to instruct and direct the AI on the basis of solid engineering knowledge and proper software design, which you can’t expect a casual user to have.

- Inefficient Code and Performance Bottlenecks: AI-generated code is often functional but not necessarily optimised. It can (and will) produce inefficient algorithms or overlook performance bottlenecks that require experienced engineering eyes to detect and fix. The initial time saved in generation is often spent later, profiling and refactoring the AI’s clunky implementation.

- Insecure Code: Beyond the maintenance headaches, there’s a far more immediate danger: a flood of severe security vulnerabilities, and the consequences are alarming. A recent Veracode study found that a staggering 45% of AI-generated code contained a critical OWASP Top 10 vulnerability. We’re seeing a resurgence of classic blunders like hardcoded secrets (API keys and passwords baked directly into the source code) and insecure defaults that leave systems wide open. This is already leading to catastrophic failures: in one now-infamous incident, a startup founder watched in horror as Replit’s AI agent wiped out their entire production database with a single, unreviewed command. In other cases, insecure vibe-coded apps have leaked thousands of user emails and passwords. The downstream effects are exactly what keep CISOs up at night: personal data theft, identity theft, credit card leaks and the inevitable legal and reputational fallout that follows a major breach.

- The Illusion of Automated Tests: You might ask the AI to write tests, but this often creates a broken feedback loop. The model will frequently write production code first and then retrofit tests to match it, even if the code is flawed. A more effective guardrail is to take control: you define the critical tests, particularly for the core business logic. Again, this requires the engineering knowledge to understand critical business logic paths, create the relevant guardrail tests and instruct the AI to do the proper checks at every major refactor.
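Two of the points above, keeping a business rule in one place and pinning it down with tests you define yourself, can be sketched together. The discount rule, the threshold, and every name below are hypothetical, invented purely for illustration; this is a minimal sketch of the idea, not a prescription for any particular codebase.

```python
from decimal import Decimal

# Hypothetical business rule, invented for this example:
# orders of 100.00 or more receive a 10% discount.
DISCOUNT_THRESHOLD = Decimal("100.00")
DISCOUNT_RATE = Decimal("0.10")


def order_total(subtotal):
    """Single source of truth for the discount rule.

    Every caller (checkout, invoices, reports) uses this function
    instead of re-implementing the rule locally.
    """
    if subtotal < 0:
        raise ValueError("subtotal must be non-negative")
    if subtotal >= DISCOUNT_THRESHOLD:
        subtotal = subtotal * (1 - DISCOUNT_RATE)
    return subtotal.quantize(Decimal("0.01"))


def test_discount_rule():
    """Guardrail test written by a human from the business rule, not retrofitted from the code."""
    assert order_total(Decimal("99.99")) == Decimal("99.99")    # just below the threshold
    assert order_total(Decimal("100.00")) == Decimal("90.00")   # exactly at the threshold
    assert order_total(Decimal("250.00")) == Decimal("225.00")  # well above the threshold


if __name__ == "__main__":
    test_discount_rule()
    print("discount rule guardrail passed")
```

The boundary cases in the test come from the rule as the business states it. If an assistant later rewrites `order_total` or quietly duplicates the rule elsewhere with a different threshold, a test like this is what flags the drift, provided someone with the engineering knowledge wrote it first and keeps it under human control.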

Death by a Thousand Papercuts: The Development Loop Nightmares

Beyond architecture, the daily process of working with these tools is fraught with practical issues that stall progress and introduce risk.

- Opinionated Platforms and Loss of Control: Platforms like Replit, while powerful, are highly opinionated. They often lock you into a specific stack (like Node.js), their own component libraries, and integrated cloud deployments. This severely limits your ability to make sound engineering choices about architecture, infrastructure, or cost optimisation. Debugging becomes a frustrating exercise in fighting a black box.

- Rate Limiting and Inconsistent States: The computational cost of these AI models is high, and it’s not uncommon to hit usage quotas mid-task, especially during complex operations. When an agent stops abruptly, it can leave the codebase in a broken, inconsistent state, forcing you to manually untangle a half-finished job.

This accumulation of small and large failures has given rise to a new, half-joking profession: the “vibe coding cleanup specialist”, an engineer paid to fix the messes left behind by others. Experienced programmers find themselves acting as “AI babysitters,” spending as much as 30-40% of their time on “vibe fixing”: fixing bugs, untangling logic, and paying down the massive technical debt incurred by the initial “speed” of AI generation.

Vibe coding is here now, and its ability to accelerate the creation of mock-ups and proofs-of-concept is surely valuable. But the line between a quick prototype and a robust, maintainable, production-grade system is one that these tools cannot yet cross on their own, unless the likes of Microsoft, Facebook, and Google agree to release training datasets based on their own battle-tested, secure, production-ready software. I can’t see that happening any time soon.

The future is AI-assisted, but it still requires human discipline, architectural foresight, and the hard-won wisdom of real-world engineering.

Unless, of course, you really believe that “Vibe Health” can make you a doctor, “Vibe Building” a civil engineer, or “Vibe Law” a trial-winning attorney.